Abstract

This research paper delves into the pervasive and evolving challenges posed by deepfake technology, exploring its implications on individuals, society, and legal frameworks. It discusses the blurred lines between reality and manipulation, highlighting the accessibility of deepfake tools to both skilled and unskilled individuals. The paper emphasizes the multifaceted threats, from manipulating individuals for financial scams to influencing political campaigns and spreading misinformation.

It scrutinizes the legal landscape, noting the absence of comprehensive regulations and the impact of such content on privacy and human dignity. The Indian perspective is examined, detailing relevant sections of the Information Technology Act, IPC, and existing gaps in legal provisions.

Internationally, the paper explores the approaches taken by the United States, China, and the European Union to tackle deepfake challenges. It also examines copyright issues, including the World Intellectual Property Organization's proposal that copyright protection should depend on whether the content distorts an individual’s life. The research concludes with a recent case, Akshay Tanna v John Doe & Ors, illustrating the legal actions taken against the misuse of identity and deepfake content for investment scams. Overall, this paper underscores the urgency of a comprehensive and adaptive legal framework to address the growing threats posed by deepfake technology.

Keywords

Deepfakes, Information Technology Act, cyber security, artificial intelligence, copyright issues

Introduction

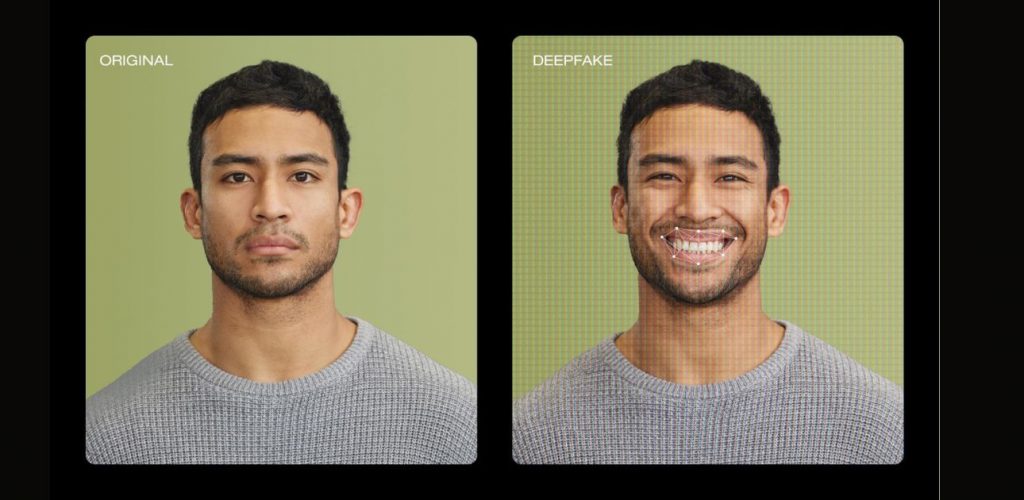

The alteration of media obscures the line between what is real and what is not. Over time, we have come to see that it has certain benefits but also many drawbacks. It can be used to manipulate and sabotage individuals, undermine elections, and spread misinformation on a mass scale.

Even though applications like Photoshop have existed for a long time, what makes AI-generated deepfakes even more sinister when used with wrong intentions is their accessibility. Deepfakes can be created by skilled and unskilled people alike.

“The tools to create and disseminate disinformation are easier, faster, cheaper, and more accessible than ever”, according to The Deep Trust Alliance.

Detecting deepfakes has also become a tedious task as the tools for creating them have become more accessible.

Deepfakes are mostly used to distort reality, which makes them one of the growing dangers of our time. The AI startup Deeptrace found that 96% of the deepfakes in circulation were pornographic, and that most of the targets were women.

This is a new development in revenge porn that we are witnessing today. Recently, pop megastar Taylor Swift became a victim when thousands of deepfake images of her circulated on X. In the first use of AI-generated deepfakes in political campaigns, back in 2020, a series of videos featuring Bharatiya Janata Party (BJP) leader Manoj Tiwari circulated across various WhatsApp groups. These videos portrayed Tiwari making accusations against his political adversary Arvind Kejriwal in both English and Haryanvi, just ahead of the Delhi elections. In another instance, a manipulated video of Madhya Pradesh Congress chief Kamal Nath gained widespread attention, causing uncertainty about the fate of the State government’s Laadli Behna Scheme.

In March 2022, a deepfake video featuring Ukrainian President Volodymyr Zelenskyy urging his compatriots to disarm gained widespread attention following a cybercriminal intrusion into a Ukrainian television channel.

These deepfakes are being created by skilled and unskilled people alike. Governments, too, might start using them to overthrow the opposition or to undermine extremist groups.

One of the major obstacles in dealing with deepfakes is the legal vacuum surrounding the ways in which their growth can be regulated.

Some social networking sites have restricted the circulation of deepfakes. In 2020, Facebook banned deepfake videos around the time of the US elections, and more recently, after deepfakes of Taylor Swift spread, X (formerly Twitter) stopped showing any search results for her name.

In law, however, we are yet to see any significant development in the regulation of deepfakes.

Research Methodology

The research paper employs secondary sources, encompassing blogs, newspapers, articles, research papers, and websites. Its descriptive nature offers a comprehensive exploration of the multifaceted challenges posed by deepfake technology, drawing insights from diverse media and expert analyses to provide a thorough understanding of the subject matter.

Review of Literature

The term “deepfake” first surfaced in 2017 on Reddit, where users began overlaying celebrities’ faces onto different individuals, particularly within adult content. The article adopts a broad definition of deepfake, encompassing various manipulations consistent with the popular understanding. In this context, a deepfake typically involves creating a video using sophisticated technical methods to depict an individual saying or doing something they did not.

Detecting such manipulations proves challenging, as applications extend to realistic-looking videos generated without advanced technology, high-quality videos featuring nonexistent individuals, fake audio or text fragments, and manipulated satellite signals. This inclusive perspective is considered essential for legal and policy considerations, prioritizing outcomes over specific technical methods.

According to a 2019 study by the AI startup Deeptrace, 96% of deepfakes were pornographic and 99% targeted women. The rise of deepfakes has a clear and substantial impact on women’s safety. Creating revenge porn has become much easier than it was before: any Tom, Dick, or Harry with an internet connection and enough malice can ruin a woman’s life.

When deepfakes of Taylor Swift circulated, Congress was alarmed, and discussions about passing the No AI FRAUD Act followed. According to ABC News, the No AI FRAUD Act, if passed, would create a federal jurisdiction that will:

“Reaffirm that everyone’s likeness and voice is protected and give individuals the right to control the use of their identifying characteristics.

Empower individuals to enforce this right against those who facilitate, create and spread AI frauds without their permission.

Balance the rights against First Amendment protections to safeguard speech and innovation.”

According to Rep. Dean, “at a time of rapidly evolving AI, it is critical that Congress creates protections against harmful AI. My and Rep. Maria Salazar’s No AI FRAUD Act is intended to target the MOST harmful kinds of AI deepfakes by giving victims like Taylor Swift a chance to fight back in civil court.”

This paper draws on various newspapers, such as The Hindu and The Wire, to underline the impact of deepfakes on our society. According to them, deepfakes pose a significant threat to the autonomy of individuals. Deepfakes also undermine ethics and the right to privacy of individuals. This paper highlights how India and other nations are dealing with deepfakes and the copyright issues arising out of them.

The Indian Perspective

Section 66E of the Information Technology Act, 2000 applies to deepfake crimes that involve the capture, publication, or transmission of a person’s image in a way that violates their right to privacy. An offense of this nature is punishable with up to three years of imprisonment, a fine of up to two lakh rupees, or both.

Section 66D of the IT Act punishes those who use communication devices or computer resources with malicious intent to cheat by impersonating another person. An offense under this provision carries a penalty of up to three years of imprisonment and a fine of up to one lakh rupees.

In addition, Sections 67, 67A, and 67B of the IT Act can be used to prosecute those who publish or transmit deepfakes that are sexually explicit or obscene in nature.

Section 67 of the IT Act deals with the punishment for ‘publishing or transmitting obscene material in electronic form.’ Under this section, legal consequences follow for anyone who shares, publishes, or allows the distribution of explicit, voyeuristic, or inappropriate content through electronic channels, with the aim of preventing the corruption and moral degradation of those exposed to it. On a first conviction, individuals could incur a fine of up to 5 lakh rupees and a maximum prison sentence of three years. On a second or subsequent conviction, the maximum penalty rises to 5 years in prison and a fine of 10 lakh rupees.

Section 67A deals with ‘Punishment for publishing or transmitting of material containing sexually explicit act, etc., in electronic form.’ It provides that individuals who publish, transmit, or cause the publication or transmission of electronic material containing sexually explicit acts or conduct may face imprisonment for a term of up to 5 years on a first conviction. On a second or subsequent conviction, the penalty rises to imprisonment for up to 7 years. In either case, a fine of up to ten lakh rupees may also be imposed.

Section 67B deals with ‘Punishment for publishing or transmitting of material depicting children in sexually explicit act, etc., in electronic form.’ It provides that any individual who publishes, transmits, or creates explicit material involving children online, entices minors into explicit online relationships, or facilitates child abuse will face imprisonment of up to 5 years and a fine of up to ten lakh rupees on a first conviction. Exemptions apply to material that is justified in the interest of science, literature, art, learning, heritage, or religious purposes. ‘Children’ are individuals below 18 years of age.

The rules framed under the IT Act additionally prohibit the hosting of ‘any content that impersonates another person’ and require social media platforms to quickly take down ‘artificially morphed images’ of individuals once they are flagged.

If they fail to take down such content, they risk losing their ‘safe harbor’ protection, a provision that shields social media companies from regulatory liability for third-party content shared by users on their platforms.

Provisions of the Indian Penal Code, 1860 (IPC) can also be relied on in cybercrime cases involving deepfakes: Section 509 (‘words, gestures, or acts intended to insult the modesty of a woman’), Section 499 (criminal defamation), and Sections 153A and 153B (‘spreading hate on communal lines’), among others. Explicit deepfakes of actress Rashmika Mandanna recently circulated on the internet. In that case, an FIR against unknown persons was registered invoking Section 465 (forgery) and Section 469 (‘forgery to harm the reputation of the party’).

The Copyright Act, 1957 can be invoked if a copyrighted image is used in the creation of a deepfake. Section 51 prohibits ‘the unauthorised use of any property belonging to another person and on which the latter enjoys an exclusive right.’

In the aftermath of the anger over the deepfake video of Rashmika Mandanna, the Union Minister of Electronics and Information Technology, Ashwini Vaishnaw, held a meeting on November 23 with social media platforms, AI companies, and industry representatives, where he conceded that “a new crisis is emerging due to deepfakes” and that “there is a very big section of society which does not have a parallel verification system” to address this issue.

India currently does not have a comprehensive framework for data protection and data privacy. The Personal Data Protection Bill, 2019 was introduced in the Lok Sabha by the Minister of Electronics and Information Technology, Mr Ravi Shankar Prasad, on December 11, 2019. The Bill provides for the protection of individuals' personal data and establishes a Data Protection Authority for that purpose.

Personal data is data about an individual's distinct traits and attributes that can be used to distinguish them from everyone else. It includes biometric data, financial data, religion, and anything else that is distinct to a person or forms part of their identity. The purpose of the Data Protection Authority is to protect the interests of individuals, prevent the misuse of personal data, and ensure compliance with the Bill. It consists of a chairperson and six members, each with at least 10 years of experience. The Bill also amends the Information Technology Act, 2000 to delete the provisions related to compensation payable by companies for failure to protect personal data.

The Minister of State for Electronics and Information Technology, Rajeev Chandrasekhar, has said that existing laws are sufficient to deal with deepfakes if they are strictly enforced. An advisory was sent to various social media firms invoking Section 66D of the IT Act and Rule 3(1)(b) of the IT Rules, reminding them of their obligation to remove such content within the timeframes stipulated in the regulations.

The use of deepfakes to spread misinformation about the government, or to incite hatred and discontent against it, is a serious issue that can have significant repercussions. These crimes can be prosecuted under Section 66-F (‘cyber terrorism’) of the Information Technology Act, 2000 and the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2022 framed under it. Section 121 (waging war against the Government of India) and Section 124-A (sedition) of the Penal Code, 1860 could also be invoked in this regard.

The non-consensual use of an individual’s images for deepfakes infringes the right to privacy under Article 21 of the Constitution. In KS Puttaswamy v. Union of India, the Supreme Court established that privacy safeguards informational autonomy, human dignity, and control of personal data. However, the absence of a specific legal framework makes it challenging for victims to pursue remedies for such violations.

International Perspective

A limited number of countries, like Australia, South Africa, and Britain, have enacted laws that address deepfake porn, although it is not explicitly termed as such. Britain’s recently enacted Online Safety Act carries a maximum penalty of two years in jail. In South Korea, where K-pop stars have often been targeted, the production of deepfake porn for profit can result in a maximum seven-year jail term. In the United States, there is no federal law on deepfake porn, and approximately 10 states have introduced a diverse range of legislation. Some states, like California, have not criminalized deepfake porn but allow civil lawsuits.

In October 2023, US President Joe Biden signed an extensive executive order on artificial intelligence to address the many risks that come with it, covering issues ranging from national security to privacy. The Department of Commerce was given the significant task of establishing standards for labelling AI-generated media to make detection easier, a practice also known as watermarking.

States like California and Texas have passed laws criminalizing the publication and distribution of deepfake videos created with the intent of influencing the outcome of elections. Virginia has enacted a law imposing criminal penalties for the distribution of non-consensual deepfake pornography.

The DEEPFAKES Accountability Act, 2023, recently introduced in Congress, would require the creators of deepfakes to label them on social media and other online platforms and to disclose the alterations made to the original content. Failure to label such ‘malicious deepfakes’ would attract criminal sanctions.

In China, the Cyberspace Administration introduced new regulations restricting the use of ‘deep synthesis technology’ in order to curb misinformation. Any doctored content must be explicitly labeled and must be traceable to its original source. ‘Deep synthesis service providers’ must abide by local laws, act ethically, and maintain the ‘correct political direction and correct public opinion orientation.’

The European Union has strengthened its ‘Code of Practice on Disinformation’, which requires social media giants like Google, Meta, and Twitter to start ‘flagging’ deepfake content on their platforms. The code was first introduced in 2018 as a voluntary self-regulatory instrument. The Digital Services Act aims to increase the monitoring of digital platforms in order to curb various sorts of misuse. Under the EU AI Act, deepfake providers are subject to transparency and disclosure requirements.

The Bletchley Declaration, signed by 28 nations, is also concerned with AI manipulation.

Copyright Issues

The World Intellectual Property Organization (WIPO), in its December 2019 publication, examined the complexities of deepfake media. WIPO recognized that the problems associated with deepfakes go beyond conventional copyright infringement to encompass violations of human rights, privacy, and personal data protection. This underscores the need for an in-depth assessment of whether copyright should be extended to deepfake imagery. WIPO proposes that deepfake content that substantially distorts the subject’s life should not be eligible for copyright protection.

WIPO emphasizes that copyright alone is not the most efficient mechanism for combatting deepfakes, because it lacks provisions that protect the victim’s interests. Referring to Article 5(1) of the EU General Data Protection Regulation (GDPR), which requires personal data to be accurate and kept up to date, WIPO encourages the swift removal or correction of false deepfake media.

Furthermore, even if the deepfake content is factually accurate, individuals can invoke the ‘right to be forgotten’ outlined in Article 17 of the GDPR, which enables the prompt deletion of personal data. WIPO advocates a dual strategy that combines copyright with personal data protection rights as a more effective approach to the diverse challenges presented by deepfake content. It emphasizes the need for a holistic strategy that extends beyond conventional copyright frameworks to protect individuals from the negative consequences of deepfake technology.

Recent Case

Akshay Tanna v John Doe & Ors.

The Delhi High Court recently directed the removal of social media profiles, links, and groups that exploited Akshay Tanna’s identity, reputation, and goodwill to perpetrate investment scams. Various profiles and groups bearing the name of Akshay Tanna from KKR (Kohlberg Kravis Roberts) emerged on platforms such as Telegram, urging individuals to join and make payments for purported investments. Additionally, a deepfake video impersonating Akshay Tanna was created to attract potential victims.

Recognizing the extensive misuse of his identity and the fraudulent activities conducted by unknown parties in the realm of investments, Akshay Tanna initiated legal action against John Does, Telegram, and others in the Delhi High Court. Following a thorough examination of the case, the Court granted an ex-parte injunction in favor of the plaintiff, instructing Telegram and other platforms to remove the identified profiles and groups. Moreover, a restraining order was issued, prohibiting any further exploitation of Akshay Tanna’s persona.

Suggestions and Conclusion

The current upsurge of deepfakes poses a significant threat to the safety and security of individuals. Without proper regulation, deepfakes can violate the rights of people in society. The government must develop a robust legal framework that addresses the creation, distribution, and misuse of deepfakes. We must also advocate for strict penalties and quick legal recourse against perpetrators.

Some countries, such as China, are tackling deepfakes by imposing regulations such as a requirement to obtain the consent of those depicted in the videos. Canada has relied on mass public awareness campaigns to curb the creation and distribution of deepfakes made for nefarious purposes. Adding watermarks to content can also help identify its source and can protect individuals to some degree.

In the contemporary digital era, the imperative for robust legal foundations becomes paramount, particularly in the context of advanced technologies like deepfakes.

Sidra Noor

Delhi Metropolitan Education, Affiliated to Guru Gobind Singh Indraprastha University, Noida

Batch 2023-2028

REFERENCES

- Tom Simonite, Most Deepfakes Are Porn, and They’re Multiplying Fast, Wired, (Oct 7, 2019, 10:00 AM)

- Regina Mihindukulasuriya, Why the Manoj Tiwari deepfakes should have India deeply worried, The Print, (29 February, 2020, 02:04 pm IST) https://theprint.in/tech/why-the-manoj-tiwari-deepfakes-should-have-india-deeply-worried/372389/

- Vishnukant Tiwari, MP Police Registers 4 FIRs After Deepfake Videos Target PM Modi, Kamal Nath, The Quint, (29 Nov 2023, 6:49 PM IST) https://www.thequint.com/news/india/madhya-pradesh-police-registers-fir-deepfakes-pm-modi-kamal-nath

- Bobby Allyn, Deepfake video of Zelenskyy could be ‘tip of the iceberg’ in info war, experts warn, NPR, (March 16, 2022, 8:26 PM ET) https://www.npr.org/2022/03/16/1087062648/deepfake-video-zelenskyy-experts-war-manipulation-ukraine-russia

- Mallory Moench, Taylor Swift Searches Blocked by X Amid Circulation of Deepfakes, Time, (JANUARY 28, 2024 2:53 PM EST) https://time.com/6589487/taylor-swift-searches-blocked-x-twitter-deepfakes-response/

- Leah Sarnoff, Taylor Swift and No AI Fraud Act: How Congress plans to fight back against AI deepfakes, ABCnews, (January 30, 2024, 10:06 PM) https://abcnews.go.com/US/taylor-swift-ai-fraud-act-congress-plans-fight/story?id=106765709

- https://indiankanoon.org/doc/112223967/

- https://indiankanoon.org/doc/121790054/

- https://indiankanoon.org/doc/1318767/

- https://indiankanoon.org/doc/15057582/

- https://indiankanoon.org/doc/176300164/

- https://indiankanoon.org/doc/68146/

- https://indiankanoon.org/doc/1041742/

- https://indiankanoon.org/doc/857209/

- https://indiankanoon.org/doc/1317063/

- https://indiankanoon.org/doc/1038145/

- https://indiankanoon.org/doc/1827979/

- Matt Novak, Viral Video Of Actress Rashmika Mandanna Actually AI Deepfake, Forbes, (Nov 5, 2023, 03:09pm EST) https://www.forbes.com/sites/mattnovak/2023/11/05/viral-video-of-actress-rashmika-mandanna-actually-ai-deepfake/?sh=6f783dc57232

- https://indiankanoon.org/doc/1038145/

- Aditi Agrawal, IT Ministry summons social media companies over deepfakes, Hindustan Times, (Nov 21, 2023 01:14 PM IST)

- The Personal Data Protection Bill, 2019, https://prsindia.org/billtrack/the-personal-data-protection-bill-2019

- Radhika Singhal, Artificial Intelligence Posing a Threat to the Natural Right: A study on how the government is using it in the ambit of national security, Artificial Intelligence and Law, 235,237(2021)

- https://indiankanoon.org/doc/121790054/#:~:text=66D.%20Punishment%20for%20cheating%20by%20personation%20by%20using,fine%20which%20may%20extend%20to%20one%20lakh%20rupees.

- MeitY issues advisory to all intermediaries to comply with existing IT rules.

- Section 66F of the Information Technology Act, 2000

- The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021,

- https://indiankanoon.org/doc/786750/

- https://indiankanoon.org/doc/1641007/

- https://indiankanoon.org/doc/1199182/

- Justice K.S.Puttaswamy(Retd) vs Union Of India, AIR 2018 SC (SUPP) 1841 (India)

- Online Safety Act 2023, https://www.legislation.gov.uk/ukpga/2023/50/contents/enacted

- AARATRIKA BHAUMIK, Regulating deepfakes and generative AI in India | Explained, The Hindu, (December 04, 2023 06:57 pm | Updated 08:14 pm IST)

- H.R. 5586: DEEPFAKES Accountability Act, https://www.govtrack.us/congress/bills/118/hr5586/text

- AARATRIKA BHAUMIK, Regulating deepfakes and generative AI in India | Explained, The Hindu, (December 04, 2023 06:57 pm | Updated 08:14 pm IST) https://www.thehindu.com/news/national/regulating-deepfakes-generative-ai-in-india-explained/article67591640.ece

- Bletchley Declaration, https://www.insightsonindia.com/2023/11/03/bletchley-declaration/

- Harshvardhan Mudgal, The deep fake dilemma: Detection and decree, Bar and Bench, (18 Nov 2023, 8:14 pm) https://www.barandbench.com/columns/deepfake-dilemma-detection-and-desirability

- AKSHAY TANNA vs JOHN DOE & ORS., High Court of Delhi, 05th February, 2024, CS(COMM) 92/2024 & I.A. 2257/2024

- Bananaip Reporter, USE OF AKSHAY TANNA’S PERSONA AND REPUTATION ON SOCIAL MEDIA FOR INVESTMENT SCAMS TAKEN DOWN, Bananaip, (FEBRUARY 8, 2024)